The problem

I have a linked server connection between two SQL Servers roughly half a world apart: one resides in Sydney, Australia and the other in London, UK.

I was getting terrible performance.

To simulate this for testing on my home internet connection, I created an Azure database in Northern Europe; my local SQL Server is in Sydney.

I used the DimDate table as created in this CodeProject example: http://www.codeproject.com/Articles/647950/Create-and-Populate-Date-Dimension-for-Data-Wareho

(This is a 36 column table)

I ran this against my Azure database and populated it with 45,656 records (one per day from 1-Jan-1974 to 31-Dec-2099; just adjust the populate script).

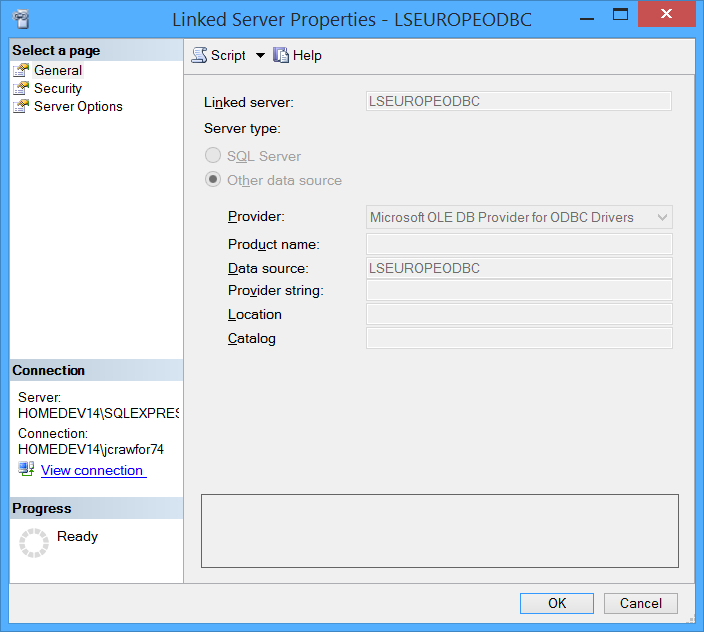

On my local SQL Server, I have a linked server connection called LSEUROPE, set to use SQLNCLI (SQL Native Client) and configured to connect to the Azure database in Europe.

On the local SQL Server:

1. Download and install Wireshark on the local server: https://www.wireshark.org/download.html

2. Download and install Compass Free (WildPackets) from http://download.cnet.com/Compass-Free/3000-2085_4-75447541.html. This is used to visualise the throughput.

Wireshark was enabled and set to monitor only the remote server, (capture filter – “host 10.10.0.1” where 10.10.0.1 is the IP address of the remote server).

Two tests were executed:

Test 1 – SSMS Direct

1. Open SSMS and connect directly to the REMOTE server.

2. Start Wireshark Capture

3. Execute “select * from dbo.DimDate”

4. Once finished, stop Wireshark

5. Save the Wireshark session as a .pcap file (Compass only opens .pcap files)

Test 2 – Linked Server

1. Open SSMS and connect to the LOCAL server.

2. Start Wireshark Capture

3. Execute “select * from LSEUROPE.TestDB.dbo.DimDate”. (Note: OPENQUERY makes no difference in this instance, as all we are testing is the throughput of transferring data from the other side of the world.)

4. Once finished, stop Wireshark

5. Save the Wireshark session as a .pcap file.

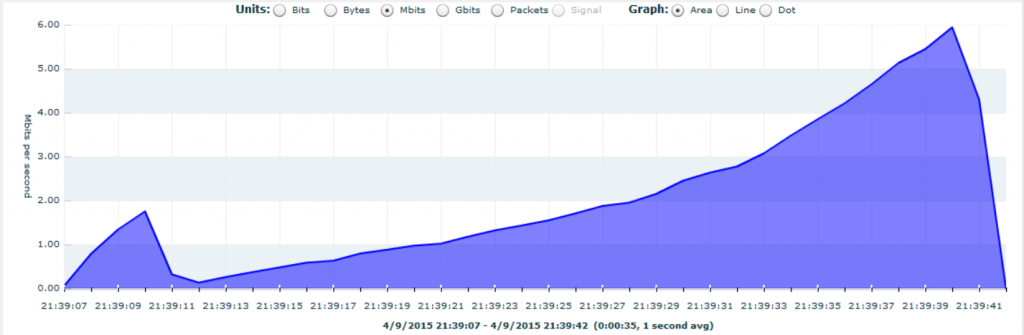

Fig 1. SSMS Direct Test – 35 sec

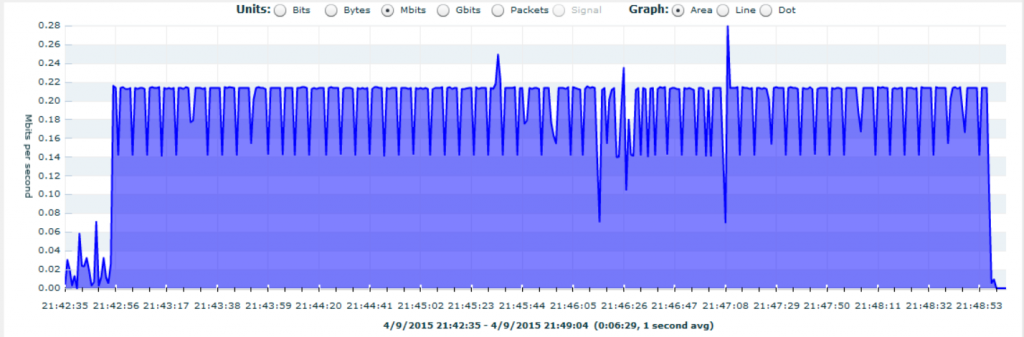

Fig 2. Linked Server Test – 6m 29 sec

As you can see, throughput on the linked server query is limited to 0.22 Mbits/s, whilst the direct SSMS query happily averages around 3 Mbits/s (again, this is just on my standard ADSL2+ home internet connection, but I have replicated exactly the same pattern at work on a 20MBps dedicated WAN link).

Some bottleneck is slowing down the data being sent via a linked server connection!

A solution of sorts

After much experimenting with the various linked server connection types, I managed to get the following results over a linked server connection.

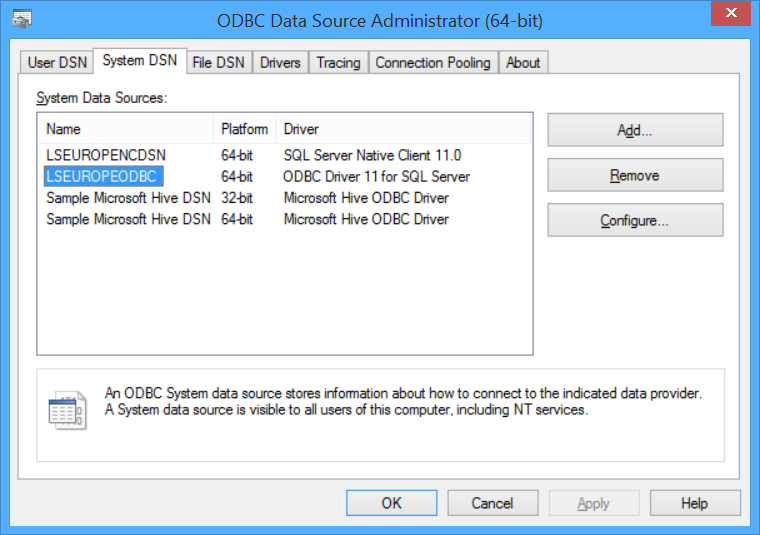

Figure 4 was obtained by creating an ODBC System DSN of type ODBC Driver 11 for SQL Server. This provided the fastest performance, bringing down the same 45,656 rows in 27 seconds.

Figure 3 was obtained by creating the ODBC System DSN using the SQL Native Client. It is now approx 46 seconds which is much better than 6 minutes, but still not the 27 seconds of the ODBC driver.

After multiple runs I was getting similar performance regardless of the client used in the DSN.

In both cases (Fig 3 and Fig 4), the linked server was created using the “Microsoft OLE DB Provider for ODBC Drivers” and configured to use the System DSN.
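To put the four timings side by side, here is a small sketch that converts each reported duration into rows per second and a speedup relative to the original SQLNCLI linked server. The durations and row count come straight from the figures in this post; the dictionary labels and the `compare` helper are just names made up for this illustration.

```python
# Durations reported in the post for pulling the same 45,656-row DimDate table.
ROWS = 45_656

# Labels are shorthand for this sketch, not official product names.
durations_sec = {
    "SSMS direct (Fig 1)": 35,
    "Linked server via SQLNCLI (Fig 2)": 6 * 60 + 29,
    "Linked server via ODBC DSN, SQL Native Client (Fig 3)": 46,
    "Linked server via ODBC DSN, ODBC Driver 11 (Fig 4)": 27,
}


def compare(durations, rows, baseline):
    """Return {label: (rows_per_sec, speedup_vs_baseline)}."""
    base = durations[baseline]
    return {
        label: (rows / secs, base / secs)
        for label, secs in durations.items()
    }


results = compare(durations_sec, ROWS, "Linked server via SQLNCLI (Fig 2)")
for label, (rate, speedup) in results.items():
    print(f"{label}: {rate:,.0f} rows/s, {speedup:.1f}x vs SQLNCLI linked server")
```

On these single-run numbers, the ODBC Driver 11 DSN comes out roughly 14 times faster than the original linked server, and even a little faster than the direct SSMS query.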

Now the real question, why does this seem to work?

I am going to test this further, as I am sure there will be syntax-type issues from changing the linked server connection (I think ODBC doesn’t handle TEXT/NTEXT fields).

Microsoft, what is going on here?

I am going to try to find out the reason for the poor performance of the “Microsoft OLE DB Provider for SQL Server”, and whether something can be done to improve it.

Were you able to see any difference in Packet Size distribution between the OleDB and ODBC test cases using Wireshark?

Hi Stuart,

I am no expert in reading the details of Wireshark packets, but from what I can see the two traces are very similar in the number and type of packets delivered.

ODBC – 17,014 packets – 9,406,662 bytes – Duration 22 seconds

SQLNCLI – 17,380 packets – 9,551,573 bytes – Duration approx 6m 7 sec

I have checked the TCP packets, and it just seems that on the SQLNCLI connection the rate at which the TCP packets are delivered is much slower, which would explain the overall difference in time.
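As a quick sanity check on the two traces quoted above, the sketch below works out the average throughput and average packet size from the packet counts, byte totals and durations. The `Trace` class is a hypothetical helper for this illustration; it assumes 1 Mbit = 1,000,000 bits and treats the "approx 6m 7 sec" duration as exactly 367 seconds.

```python
from dataclasses import dataclass


@dataclass
class Trace:
    packets: int
    total_bytes: int
    duration_sec: int

    @property
    def mbits_per_sec(self) -> float:
        # Average throughput over the whole capture, in megabits per second.
        return self.total_bytes * 8 / self.duration_sec / 1_000_000

    @property
    def avg_packet_bytes(self) -> float:
        # Mean bytes per captured packet (all packet types lumped together).
        return self.total_bytes / self.packets


traces = {
    "ODBC": Trace(17_014, 9_406_662, 22),
    # Duration is quoted as "approx 6m 7 sec"; 367 s is assumed here.
    "SQLNCLI": Trace(17_380, 9_551_573, 6 * 60 + 7),
}

for name, t in traces.items():
    print(f"{name}: {t.mbits_per_sec:.2f} Mbit/s, "
          f"{t.avg_packet_bytes:.0f} bytes/packet on average")
```

This gives about 3.42 Mbit/s for ODBC against about 0.21 Mbit/s for SQLNCLI, with an average packet size of roughly 550 bytes in both traces. That fits the observation that the packets look much the same and simply arrive more slowly over SQLNCLI.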